“New York Life all said they’ve never hired prompt engineers, but instead found that—to the extent better prompting skills are needed—it was an expertise that all existing employees could be trained on.”

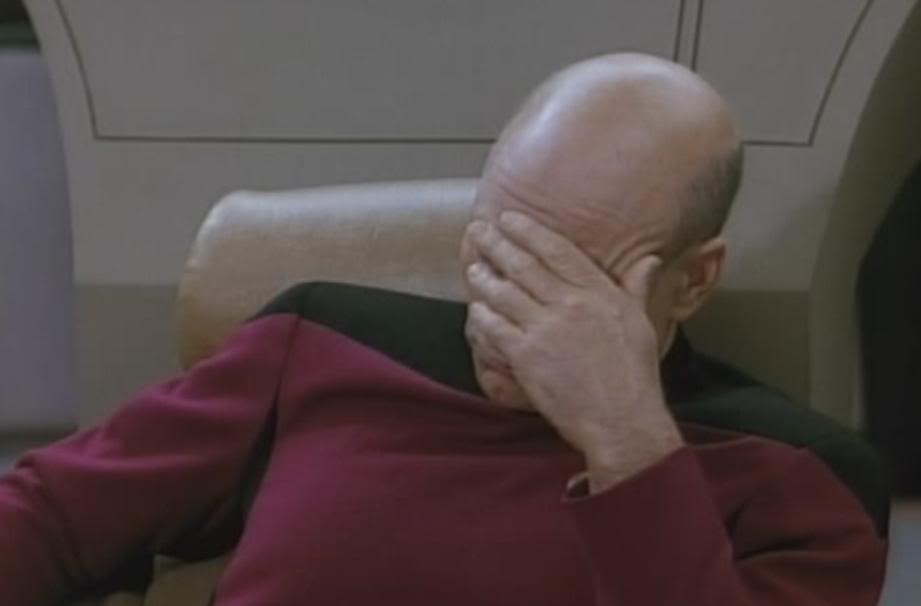

Are you telling me that the jobs invented to support a bullshit technology that lies are themselves ALSO bullshit lies?

How could this happen??

Screw New York Life, but LLMs are definitely not bullshit technology. Some amount of skill in so-called ‘prompt engineering’ makes a huge difference in using LLMs as the tool that they are. I think the big mistake people are making is using them like a search engine. I use one all the time (in a scientific field) but never in a capacity where it can ‘lie’ to me. It’s a very effective ‘assistant’ in both [simple] coding tasks and data analysis/management.

No shit.

prompt engineer

They were paying people to fucking ask it questions? A professional Google searcher?

I’m in IT. A lot of my job is Googling the answer, but I have to know what to ask and how to sift through the results for the things most employees wouldn’t know to look for.

A photographer will know what to input better than the average Joe to get a better photographic image out of ChatGPT by giving F-stops/aperture, shutter speed, ISO, lenses, bokeh/depth of field, rule of thirds, etc.

But yes… we’re getting closer and closer to George Jetson’s job of pushing one button and calling it a day.

They were paying people to try to make LLMs answer questions correctly, because getting an LLM to do what you want it to was excruciatingly difficult just a few years back (and kinda still is).

Especially when what most companies want (factual, accurate, intelligent answers to difficult tasks or questions) is not something LLMs are actually made for (slapping words together using probability in a way that makes a reader think it might have been written by a human).

But yes. Professional Google searcher, just like back in the very early 2000s, when there were TV quiz shows where people were given a question and tried to find the answer as fast as possible, because it was an actual special skill (and sometimes it feels like it still is, judging by how often people ask stupidly simple questions on social media).

Pretty sure lots of kids writing a paper for school are “prompt engineers”.

Yay!