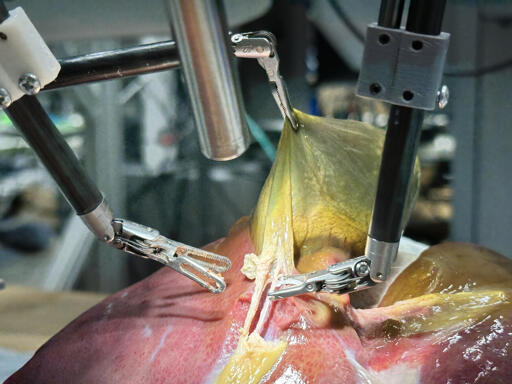

A robot trained on videos of surgeries performed a lengthy phase of a gallbladder removal without human help. The robot operated for the first time on a lifelike patient, and during the operation, responded to and learned from voice commands from the team—like a novice surgeon working with a mentor.

The robot performed unflappably across trials and with the expertise of a skilled human surgeon, even during unexpected scenarios typical of real-life medical emergencies.

And then you’re lying on the table. Unfortunately, your case is a little different from the standard surgery. Good luck.

At some point in the not-very-distant future, you will probably be better off with the robot/AI, as it will have wider knowledge of how to handle fringe cases than a human surgeon.

We are not there yet, but maybe in 10 years, or maybe 20? Or the most common cases could be automated while the more nuanced surgeries are left to actual doctors.

The main issue with any computer is that it can’t adapt to new situations. We can infer and work through new problems. The more variables, the more “new” problems. The problem with biology is that there aren’t really any hard-and-fast rules; there are almost always exceptions. The amount of functional memory and computing power that would take is ridiculous for a computer. Driving works mostly because there are straightforward rules.

I doubt it. It would be enough if the AI could recognize when it reaches its limits and hand over to a human. But that is hard even for humans, as Dunning & Kruger discovered.

realistic surgery

lifelike patient

I wonder how doctors could compare this simulation to a real surgery. I’m willing to bet it’s “realistic and lifelike” in the way a 4D movie is.

Biological creatures don’t follow perfect patterns; all sorts of unexpected things happen. I was just reading an article about someone whose organs are entirely mirrored compared to the average person.

Nothing about humans is “standard”.

I think “lifelike” in this context means a dead human. The robot was originally trained on pigs.

The article mentions that previously they used pig cadavers with dyes and specially marked tissues to guide the robot. While it doesn’t specify exactly what the “lifelike patient” is, to me the article reads like they’re still using a pig cadaver just without those aids.

Right, I’m sure a bunch of armchair docs on Lemmy are more knowledgeable about all this, and the procedures it requires, than actual licensed doctors.

More than the doctors? No, absolutely not.

More than the bean counters who want to replace these doctors with unsupervised robots? I’m a lot more confident on that one.

What if I’m on the table telling the truth?

That’s a different thing indeed. In your case the AI 🤖 goes wild: it will strip dance and tell poor jokes (while flirting with the ventilation machine).

I assume my insides are pretty much like everyone else’s. I feel like if there was that much of a complication it would have been pretty obvious before the procedure started.

“Hey this guy had two heads, I’m sure the AI will work it out.”

know what? let’s just skip the middleman and have the CEO undergo the same operation. you know, like the taser company that tasers its employees.

can’t have trust in a product unless you use the product.

I understand that what you are saying is intended as “if they trust their product, they should use it themselves,” and I agree with that.

I do think, however, that undergoing an operation a person doesn’t need isn’t ethical.

who said they won’t need it 😐

Hey boss ready for your unnecessary heart transplant just to please some random guy on the internet?

Yeah, so let’s get this done; I’ve got a meeting in 2 hours.

Just like how we jail every surgeon that does something wrong

Nah, just a thorough reproduction of the consequences of that wrong.

Inb4 someone added Texas Chainsaw Massacre and Saw to the training data.

Then it saw Innerspace and invented nanobots. So you win some, you lose some.

without human help

…

responded to and learned from voice commands from the team

🤨🤔

They should have specified “without physical human help.”

You underestimate the demands that performing surgery places on a surgeon’s body. This makes it much less prone to fatigue and mistakes, and it can keep going even if the surgeon becomes physically unable to continue life-saving surgery.

That’s absolutely not the point. I was criticizing the journalism, not technology. 🙄

I want that thing where a light “paints” over wounds and they heal.

thank you for removing my gallbladder, robot, but i had a brain tumor

Not fair. A robot can watch videos and perform surgery but when I do it I’m called a “monster” and “quack”.

But seriously, this robot surgeon still needs a surgeon to chaperone so what’s being gained or saved? It’s just surgery with extra steps. This has the same execution as RoboTaxis (which also have a human onboard for emergencies) and those things are rightly being called a nightmare. What separates this from that?

It can’t sneeze

Human flaws. With this, a surgeon doesn’t require steady hands, so even if their hands were damaged in some way, they could still continue being a surgeon.

so this helps with costs right? right? 🥺🤔🤨

It helps the capitalists’ profit margins 😊😊😊

I know, I’m over here trying to light little fires, LoL JK, but yeah, for sure we’ll never see reduced costs.

Oh I get it, trust. I’m sure we’re both equally mad about it lol

AI and robotics are coming for the highest-paid jobs first. The attack on education is much more sinister than you think. We are approaching an era where many knowledge-based and high-cost labor fields will be eliminated. This attack on education is happening because the plan is to replace it all with AI.

It is pretty sickening really to think of a world where your AI teacher supplied by Zombie Twitter will teach history lessons to young pupils about whether or not the Holocaust is real. I am not making this shit up.

This is no longer about wars against nations. This has become the war for the human mind and billionaires just found the cheat code.

So are we fully abandoning reason based robots?

Is the future gonna just be things that guess but just keep getting better at guessing?

I’m disappointed in the future.

reason based robots

What’s that?

That’s all people are too, though.

“OMG it was supposed to take out my LEFT kidney! I’m gonna die!!!”

“Oops, the surgeon in the training video took out a right kidney. Uhh… sorry.”

Really hope they tried it on a grape first at least.

Okay but why? No thank you.

Naturally as this kind of thing moves into use on actual people it will be used on the wealthiest and most connected among us in equal measure to us lowly plebs right…right?

Are you kidding!? It’ll be rolled out to poor people first! (gotta iron out the last of the bugs somehow)

My son’s surgeon told me about the evolution of one particular cardiac procedure. Most of the “good” doctors were laying many stitches in a tight fashion while the “lazy” doctors laid down fewer stitches a bit looser. Turns out that the patients of the “lazy” doctors had a better recovery rate so now that’s the standard procedure.

Sometimes divergent behaviors can actually lead to better behavior. An AI surgeon that is “lazy” probably wouldn’t exist and engineers would probably stamp out that behavior before it even got to the OR.

That’s just one case of professional laziness in an entire ocean of medical horror stories caused by the same.

Eliminating room for error (not to say AI is flawless, but that is the goal in most cases) is a good way to never learn anything new. I don’t completely dislike this idea, but I’m sure it will be driven toward cutting costs, not saving lives.

Or more likely they weren’t actually being lazy, they knew they needed to leave room for swelling and healing. The surgeons that did tight stitches thought theirs was better because it looked better immediately after the surgery.

Surgeons are actually pretty well known for being arrogant, and claiming anyone who doesn’t do their neat and tight stitching is lazy is completely on brand for people like that.

i mean, you could just as easily say professors and universities would stamp those habits out of human doctors, but, as we can see… they don’t.

just because an intelligence was engineered doesn’t mean it’s incapable of divergent behaviors, nor does it mean the ones it displays are of intrinsically lesser quality than those a human in the same scenario might exhibit. i don’t understand this POV you have, because it’s the direct opposite of what most people complain about with machine learning tools… first they’re so non-deterministic as to be useless, but now they’re so deterministic as to be entirely incapable of diverging from their habits?

setting aside that i just kind of disagree with your overall premise (that’s okay, that’s allowed on the internet, and we can continue not hating each other!), i just kind of find this “contradiction,” if you can even call it that, pretty funny to see pop up out in the wild.

thanks for sharing the anecdote about the cardiac procedure, that’s quite interesting. if it isn’t too personal to ask, would you happen to know the specific procedure implicated here?

Not specifically, but I think the guidance applies to most incisions of the heart. I think the fact that it’s a muscular, constantly moving organ makes it different from something like an epidermal stitch.

And my post isn’t to say “all mistakes are good” but that invariability can lead to stagnation. AI doesn’t do things the same way every single time, but it also doesn’t aim to “experiment” as a way to grow or to self-reflect on its own efficacy (which could lead to model collapse). That’s almost at the level of sentience.

If we go by that logic, some worker from your supermarket should be able to do surgery.

Doctors have to learn this much so they can handle really unusual stuff, not because they need to know all of it for a standard surgery.

That’s ridiculous. Everyone knows that for a robot to perform an operation like this safely, it needs human-written code and a LiDAR.