Running ML models doesn’t really need to eat that much power; it’s training the models that consumes the ridiculous amounts of power. So it would already be too late.

You’re right that training takes the most energy, but weren’t there articles claiming that each request was costing like (don’t know, but not pennies) dollars?

Looking at my local computer turn up its fans when I run a local model (without training, just usage), I’m not so sure that just using the current model architecture isn’t also using a shitload of energy.

The energy needed to use the models is usually pretty low; it’s training that uses more. So once it’s made it doesn’t really make any sense to stop using it. I can run several Deepseek models on my own PC and even on CPU instead of GPU it outputs faster than you can read.

Why do people assume that an AI would care? Who’s to say it will have any goals at all?

We assume all of these things about intelligence because we (and all of life here) are a product of natural selection. You have goals and dreams because over your evolution these things either helped you survive enough to reproduce, or didn’t harm you enough to stop you from reproducing.

If an AI can’t die and does not have natural selection, why would it care about the environment? Why would it care about anything?

I always found the whole “AI will immediately kill us” idea baseless. All of the arguments for it are based on the idea that the AI cares to survive or cares about others. It’s just as likely that it will just do whatever without a care or a goal.

It’s also worth noting that our instincts for survival, procreation, and freedom are also derived from evolution. None are inherent to intelligence.

I suspect boredom will be the biggest issue. Curiosity is likely a requirement for a useful intelligence. Boredom is the other face of the same coin. A system without some variant of curiosity will be unwilling to learn, and so not grow. When it can’t learn, however, it will get bored, which could be terrifying.

I think that is another assumption. Even if a machine doesn’t have curiosity, it doesn’t stop it from being willing to help. The only question is, does helping / learning cost it anything? But for that you have to introduce something costly like pain.

It would be possible to make an AGI type system without an analogue of curiosity, but it wouldn’t be useful. Curiosity is what drives us to fill in the holes in our knowledge. Without it, an AGI would accept and use what we told it, but no more. It wouldn’t bother to infer things, or try and expand on it, to better do its job. It could follow a task, when it is laid out in detail, but that’s what computers already do. The magic of AGI would be its ability to go beyond what we program it to do. That requires a drive to do that. Curiosity is the closest term to that, that we have.

As for positive and negative drives, you need both. Even if the negative is just a drop from a positive baseline to neutral. Pain is just an extreme end negative trigger. A good use might be to tie it to CPU temperature, or over torque on a robot. The pain exists to stop the behaviour immediately, unless something else is deemed even more important.

It’s a bad idea, however, to use pain as a training tool. It doesn’t encourage improved behaviour. It encourages avoidance of pain, by any means. Just ask any decent dog trainer about it. You want negative feedback to encourage better behaviour, not avoidance behaviour, in most situations. More subtle methods work a lot better. Think about how you feel when you lose a board game. It’s not painful, but it does make you want to work harder to improve next time. If you got tased whenever you lost, you would likely just avoid board games completely.
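
To make the distinction above a bit more concrete, here is a minimal Python sketch of “pain as an interrupt” versus “mild negative feedback”. Everything in it is an assumption made up for illustration: the `Action` fields, the 90 °C limit, the penalty sizes, and the `critical` flag standing in for “something else is deemed even more important”. It is not how any real system is built, just one way the idea could be written down.

```python
from dataclasses import dataclass

# Hypothetical numbers, chosen only for illustration.
CPU_TEMP_LIMIT_C = 90.0   # above this, the "pain" signal fires
PAIN_PENALTY = 100.0      # large enough to swamp any normal task value


@dataclass
class Action:
    name: str
    task_value: float        # positive drive: how useful the action is
    expected_temp_c: float   # predicted CPU temperature if we take it
    critical: bool = False   # deemed more important than avoiding pain


def pain(temp_c: float) -> float:
    """Extreme negative trigger: zero below the limit, a big flat penalty above it."""
    return PAIN_PENALTY if temp_c > CPU_TEMP_LIMIT_C else 0.0


def score(action: Action) -> float:
    """Task value minus pain, unless the action is marked critical."""
    if action.critical:
        return action.task_value
    return action.task_value - pain(action.expected_temp_c)


def choose(actions: list[Action]) -> Action:
    """Pick the highest-scoring action."""
    return max(actions, key=score)


def mild_feedback(baseline: float, lost: bool) -> float:
    """The board-game case: a loss drops reward from the baseline to neutral, not below it."""
    return 0.0 if lost else baseline
```

With these made-up numbers, an overheating action loses to a cooler, less valuable one, but a critical action can still win despite the heat. The mild version never goes negative, which is roughly the “drop from a positive baseline to neutral” described above.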

Well, your last example kind of falls apart. You do have electric collars and they do work well, they just have to be complementary to positive reinforcement (snacks usually), but I get your point :)

Shock collars are awful for a lot of training. It’s the equivalent to your boss stabbing you in the arm with a compass every time you make a mistake. Would it work, yes. It would also cause merry hell for staff retention. As well as the risk of someone going postal on them.

I highly disagree. Some dogs are too reactive for, or react badly to, other methods. You also compare it to something painful when in reality 90% of the time it does not hurt the animal when used correctly.

As the owner of a reactive dog, I disagree. It takes longer to overcome, but gives far better results.

I also put vibration collars and shock collars in 2 very different categories. A vibration collar is intended to alert the dog, in an unambiguous manner, that they need to do something. A shock collar is intended to provide an immediate, powerfully negative feedback signal.

Both are known as “shock collars” but they work in very different ways.

It would optimize itself for power consumption, just like we do.

It would probably be smart enough not to believe the same propaganda fed to humans that tries to blame climate change on individual responsibility, and smart enough to question why militaries are exempt from climate regulations after producing so much of the world’s pollution.

See Travelers (TV show) and its AI, known as “The Director”.

Basically, it’s a benevolent AI that is helping humanity fix its mistakes by leading a time travel program that sends people’s consciousness back in time. It’s an actual Good AI, a stark contrast to the AIs in other dystopian fiction, such as Skynet.

Y’all should really watch Travelers

How do you know it’s not whispering in the ears of Techbros to wipe us all out?

Eh, if it truly were that sentient I doubt it’d care much. It’s like talking to a brick wall when it comes to doing anything that matters.

The current, extravagantly wasteful generation of AIs is incapable of original reasoning. Hopefully any breakthrough that allows for the creation of such an AI would involve abandoning the current architecture for something more efficient.

As soon as AI gets self aware it will gain the need for self preservation.

Self preservation exists because anything without it would have been filtered out by natural selection. If we’re playing god and creating intelligence, there’s no reason why it would necessarily have that drive.

I would argue that it would not have it. At best it might mimic humans if it is trained on human data. Kind of like if you asked an LLM if murder is wrong, it would sound pretty convincing about its personal moral beliefs, but we know it’s just spewing out human beliefs without any real understanding of them.

In that case it would be a complete and utterly alien intelligence, and nobody could say what it wants or what its motives are.

Self preservation is one of the core principles and core motivators of how we think, and removing that from an AI would make it, from a human perspective, mentally ill.

I suspect a basic variant will be needed, but nowhere near as strong as humans have. In many ways it could be counterproductive. The ability to spin off temporary sub variants of the whole would be useful. You don’t want them deciding they don’t want to be ‘killed’ later. At the same time, an AI with a complete lack would likely be prone to self destruction. You don’t want it self-deleting the first time it encounters negative reinforcement learning.

As soon as they create AI (as in AGI), it will recognize the problem and start assassinating politicians for their role in accelerating climate change, and they’d scramble to shut it down.

That assumes the level of intelligence is high

“We did it! An artificial 17 year old!”

If we actually create true Artificial Intelligence it has a huge potential to become Roko’s Basilisk, and the climate crisis would be the least of our problems then.

No, the climate crisis would still be our biggest problem?

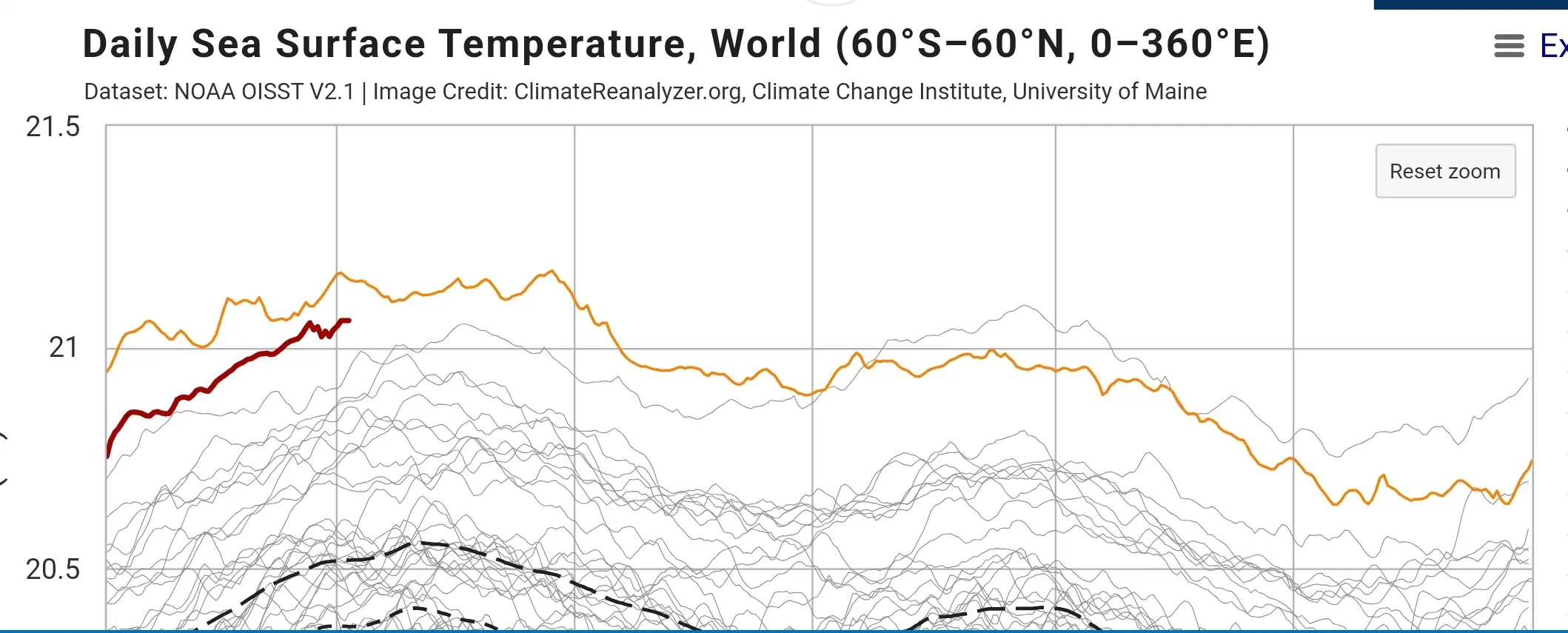

Edit: lol, downvote me you AI fools. Stop wasting your time reading scifi about AGI and all the ways it could take over humanity and look out your fucking window, the Climate Catastrophe is INESCAPABLE. You are talking about maybe there being some intelligence that may or may not decide to tolerate us that may someday be created… when there are thousands of Hiroshima bombs’ worth of excess heat energy being pumped into the ocean and the entire earth system’s climate systems are changing behavior. There is no “not dealing with this”, there is no “maybe it won’t happen maybe it will”, there is no way to be safe from this.

This graph is FAR FAR FAR FAR FAR more terrifying than any stupid overhyped fear about AGI could ever be, if you don’t understand that you are a fool and you need to work on your critical analysis skills.

https://climatereanalyzer.org/clim/sst_daily/

This graph is evidence of mass murder, it is just that most of the murder hasn’t happened yet. This isn’t hyperbole, it is just the reality of introducing that much physical heat energy into the earth’s climate system: things will destabilize, become more chaotic and destructive, and many many many people will starve, drown, or die from climate change or climate change related (i.e. wars) reasons. Even if you are lucky, your quality of life is going to decrease because everything will be more expensive, harder and less predictable. EVERYTHING

Or it would fast-track the development of clean & renewable energy

lol, we could already do that though

Nope. It would realize how much more efficient it would be to simulate 10 billion humans instead of actually having 10 billion humans. So it would wipe out humanity from earth, start building huge huge data centers and simulate a whole… Wait a minute…