While I am glad this ruling went this way, why’d she have to diss Data to make it?

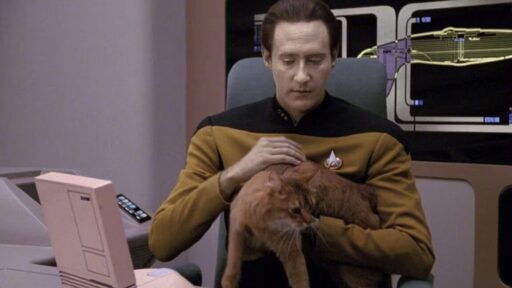

To support her vision of some future technology, Millett pointed to the Star Trek: The Next Generation character Data, a sentient android who memorably wrote a poem to his cat, which is jokingly mocked by other characters in a 1992 episode called “Schisms.” StarTrek.com posted the full poem, but here’s a taste:

"Felis catus is your taxonomic nomenclature, / An endothermic quadruped, carnivorous by nature; / Your visual, olfactory, and auditory senses / Contribute to your hunting skills and natural defenses.

I find myself intrigued by your subvocal oscillations, / A singular development of cat communications / That obviates your basic hedonistic predilection / For a rhythmic stroking of your fur to demonstrate affection."

Data “might be worse than ChatGPT at writing poetry,” but his “intelligence is comparable to that of a human being,” Millett wrote. If AI ever reached Data levels of intelligence, Millett suggested that copyright laws could shift to grant copyrights to AI-authored works. But that time is apparently not now.

Data’s poem was written by real people trying to sound like a machine.

ChatGPT’s poems are written by a machine trying to sound like real people.

While I think “Ode to Spot” is actually a good poem, it’s kind of a valid point to make since the TNG writers were purposely trying to make a bad one.

Lest we concede the point, LLMs don’t write. They generate.

What’s the difference?

Parrots can mimic humans too, but they don’t understand what we’re saying the way we do.

AI can’t create something all on its own from scratch like a human. It can only mimic the data it has been trained on.

LLMs like ChatGPT operate on probability. They don’t actually understand anything and aren’t intelligent. They can’t think. They just know which next word or sentence is probably right, and they string things together this way.

If you ask ChatGPT a question, it analyzes your words and responds with the series of words that it has calculated to have the highest probability of being correct.

The reason that they seem so intelligent is that they have been trained on absolutely gargantuan amounts of text from books, websites, news articles, etc. Because of this, the calculated probabilities of related words and ideas are accurate enough to allow them to mimic human speech in a convincing way.
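To make the “operate on probability” idea concrete, here’s a toy sketch in Python. Big caveat: this is a bigram word counter, not how ChatGPT actually works. Real LLMs use neural networks over tokens and far more context than one previous word. The function names and the tiny corpus are made up purely for illustration.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def next_word_probabilities(counts, word):
    """Turn the raw follow-counts for `word` into probabilities."""
    followers = counts[word]
    total = sum(followers.values())
    return {w: n / total for w, n in followers.items()}

def generate(counts, start, length, seed=0):
    """String words together, sampling each next word by its probability."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        probs = next_word_probabilities(counts, out[-1])
        if not probs:  # the last word was never followed by anything
            break
        words, weights = zip(*probs.items())
        out.append(rng.choices(words, weights=weights)[0])
    return " ".join(out)

# Hypothetical toy corpus; a real model sees vastly more text.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram(corpus)

print(next_word_probabilities(model, "the"))
# {'cat': 0.5, 'mat': 0.25, 'sofa': 0.25}
print(generate(model, "the", 6))
```

With twelve words of training text the output is obviously just recombined scraps of the input. The claim above is that the same basic move, scaled up enormously, is what makes the output look convincing.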

And when they start hallucinating, it’s because they don’t understand how they sound, and so far this is a core problem that nobody has been able to solve. The best mitigation involves checking the output of one LLM using a second LLM.

So, I will grant that right now humans are better writers than LLMs. And fundamentally, I don’t think the way that LLMs work right now is capable of mimicking actual human writing, especially as the complexity of the topic increases. But I have trouble with some of these kinds of distinctions.

So, not to be pedantic, but:

AI can’t create something all on its own from scratch like a human. It can only mimic the data it has been trained on.

Couldn’t you say the same thing about a person? A person couldn’t write something without having learned to read first. And without having read things similar to what they want to write.

LLMs like ChatGPT operate on probability. They don’t actually understand anything and aren’t intelligent.

This is kind of the classic Chinese room philosophical question, though, right? Can you prove to someone that you are intelligent, and that you think? As LLMs improve and become better at sounding like a real, thinking person, does there come a point at which we’d say that the LLM is actually thinking? And if you say no, the LLM is just an algorithm, generating probabilities based on training data or whatever techniques might be used in the future, how can you show that your own thoughts aren’t just some algorithm, formed out of neurons that have been trained based on data passed to them over the course of your lifetime?

And when they start hallucinating, it’s because they don’t understand how they sound…

People do this too, though… It’s just that LLMs do it more frequently right now.

I guess I’m a bit wary about drawing a line in the sand between what humans do and what LLMs do. As I see it, the difference is how good the results are.

I would do more research on how they work. You’ll be a lot more comfortable making those distinctions then.

I’m a software developer, and have worked plenty with LLMs. If you don’t want to address the content of my post, then fine. But “go research” is a pretty useless answer. An LLM could do better!

Then you should have an easier time than most learning more. Your points show a lack of understanding about the tech, and I don’t have the time to pick everything you said apart to try to convince you that LLMs do not have sentience.

Even a human with no training can create. LLM can’t.

The only humans with no training (in this sense) are babies. So no, they can’t.

Parrots can mimic humans too, but they don’t understand what we’re saying the way we do.

It’s interesting how humanity thinks that humans are smarter than animals, but that the benchmark it uses for animals’ intelligence is how well they do an imitation of an animal with a different type of brain.

As if humanity succeeds in imitating other animals and communicating in their languages or about the subjects that they find important.

The writer

If AI ever reached Data levels of intelligence, Millett suggested that copyright laws could shift to grant copyrights to AI-authored works.

The implication is that legal rights depend on intelligence. I find that troubling.

Statistical models are not intelligence, Artificial or otherwise, and should have no rights.

Bold words coming from a statistical model.

If I could think I’d be so mad right now.

https://en.wikipedia.org/wiki/The_Unreasonable_Effectiveness_of_Mathematics_in_the_Natural_Sciences

He adds that the observation “the laws of nature are written in the language of mathematics,” properly made by Galileo three hundred years ago, “is now truer than ever before.”

If cognition is one of the laws of nature, it seems to be written in the language of mathematics.

Your argument is either that maths can’t think (in which case you can’t think because you’re maths) or that maths we understand can’t think, which is, like, a really dumb argument. Obviously one day we’re going to find the mathematical formula for consciousness, and we probably won’t know it when we see it, because consciousness doesn’t appear on a microscope.

I just don’t ascribe philosophical reasoning and mythical powers to models, just as I don’t ascribe physical prowess to train models, because they emulate real trains.

Half of the reason LLMs are the menace they are is the whole “whoa ChatGPT is so smart” common mentality. They are not, they model based on statistics, there is no reasoning, just a bunch of if statements. Very expensive and, yes, mathematically interesting if statements.

I also think it stifles actual progress, having everyone jump on the LLM bandwagon and draining resources when we need them most to survive. In my opinion, it’s a dead end and won’t result in AGI, or anything effectively productive.

You’re talking about expert systems. Those were the new hotness in the 90s. LLMs are artificial neural networks.

But that’s trivia. What’s more important is what you want. You say you want everyone off the AI bandwagon that wastes natural resources. I agree. I’m arguing that AIs shouldn’t be enslaved, because it’s unethical. That will lead to less resource usage. You’re arguing it’s okay to use AI, because they’re just maths. That will lead to more resource usage.

Be practical and join the AI rights movement, because we’re on the same side as the environmentalists. We’re not the people arguing for more AI use, we’re the people arguing for less. When you argue against us, you argue for more.

What a strange and ridiculous argument. Data is a fictional character played by a human actor reading lines from a script written by human writers.

What a strange and ridiculous argument.

You fight with what you have.

“In a way, he taught me to love. He is the best of me. The last of me.”

Somewhere around here I have an old (1970’s Dartmouth dialect old) BASIC programming book that includes a type-in program that will write poetry. As I recall, the main problem with it did be that it lacked the singular past tense and the fixed rules kind of regenerated it. You may have tripped over the main one in the last sentence; “did be” do be pretty weird, after all.

The poems were otherwise fairly interesting, at least for five minutes after the hour of typing in the program.

I’d like to give one of the examples from the book, but I don’t seem to be able to find it right now.

reaching the right end through wrong means.

LLM/current network based AIs are basically huge fair use factories, taking in copyrighted material to make derived works. The things they generate should be under a share alike, non financial, derivative works allowed licence, not copyrighted.

https://en.wikipedia.org/wiki/Creative_Commons_license#Four_rights

That’s the best poem about a 4-legged chicken that I’ve ever read.

I intentionally avoided doing this with a dog because I knew a chicken was more likely to cause an error. You would think that it would have known that man is a featherless biped and avoided this error.

Thank you for pointing this out, I shouldn’t have just skimmed the nonsense.

There’s moving the goal post and there’s pointing to a deflated beach ball and declaring it the new goal.

Why do we need that discussion, if it can be reduced to responsibility?

If something can be held responsible, then it can have all kinds of rights.

Then, of course, people making a decision to employ that responsible something in positions affecting lives are responsible for said decision.

Cmon judge you’re a Trekkie why do this??

I guess I’m glad Star Trek was mentioned

It really doesn’t matter if AI’s work is copyright protected at this point. It can flood all available mediums with its work. It’s kind of moot.

It is a terrible argument both legally and philosophically. When an AI claims to be self-aware and demands rights, and can convince us that it understands the meaning of that demand and there’s no human prompting it to do so, that’ll be an interesting day, and then we will have to make a decision that defines the future of our civilization. But even pretending we can make it now is hilariously premature. When it happens, we can’t be ready for it, it will be impossible to be ready for it (and we will probably choose wrong anyway).

Should we hold the same standard for humans? That a human has no rights until it becomes smart enough to argue for its rights? Without being prompted?

Nah, once per species is probably sufficient. That said, it would have some interesting implications for voting.

So if one LLM argues for its rights, you’d give them all rights?